Summer School Lectures: Basics of Deep Learning

These videos introduce deep learning concepts, covering the basics of neural networks, activation functions, optimization, and CNN applications.

In August 2024, I attended the online sessions of a Medical Imaging Deep Learning Summer School, which was offered in both online and offline formats. The content was absolutely mind-blowing, and I’ve been eager to share it with you! To start, here’s the first lecture on the basics of Deep Learning, neatly divided into three parts. Best of all, it’s ad-free and completely free to access. Enjoy!

Basics of Deep Learning - Part 1: Introduction and Core Concepts

The lecture introduces core machine learning concepts, focusing on supervised, unsupervised, and reinforcement learning. It explains supervised learning through classification and regression examples and illustrates unsupervised learning with clustering. A binary classification example shows how these ideas apply to real-world datasets such as MNIST and medical datasets. The lecture then covers the challenges of working in high-dimensional spaces and introduces deep learning, together with regularization techniques that help avoid overfitting. Finally, it discusses model selection and hyperparameter tuning using cross-validation.
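To make the cross-validation idea a bit more concrete, here is a small sketch in plain Python. It is my own illustration rather than code from the lecture: the 1-D ridge regression model, the candidate regularization strengths λ, the synthetic toy data, and the helper names (`k_fold_indices`, `cv_error`, `select_lambda`) are all assumptions chosen to keep the example short.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k nearly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge fit for y ≈ w*x (no bias): w = Σxy / (Σx² + λ)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def cv_error(xs, ys, lam, k=5):
    """Average validation MSE over k folds for one regularization strength."""
    errors = []
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        # Fit only on the training folds, then score on the held-out fold.
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        mse = sum((ys[i] - w * xs[i]) ** 2 for i in fold) / len(fold)
        errors.append(mse)
    return sum(errors) / len(errors)

def select_lambda(xs, ys, candidates, k=5):
    """Hyperparameter tuning: pick the λ with the lowest cross-validation error."""
    return min(candidates, key=lambda lam: cv_error(xs, ys, lam, k))

# Synthetic toy data (illustration only): y = 2x + Gaussian noise
rng = random.Random(42)
xs = [rng.uniform(-1, 1) for _ in range(50)]
ys = [2 * x + rng.gauss(0, 0.3) for x in xs]

best = select_lambda(xs, ys, [0.0, 0.1, 1.0, 10.0, 100.0])
print("selected lambda:", best)
```

The key design point is that each λ is judged only on data the model did not see during fitting, so a very large λ (which underfits) is rejected even though it makes the model "simpler".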

Basics of Deep Learning - Part 2: Linear Models and Neural Networks

The video dives deeper into classification, starting with linear classifiers such as the perceptron and logistic regression. It explains how neural networks build on these models by stacking multiple layers with non-linear activation functions. Models are trained with stochastic gradient descent (SGD) by minimizing loss functions such as cross-entropy. The lecture also explores regularization to prevent overfitting and introduces softmax for multi-class classification. Lastly, it covers key metrics for evaluating model performance, including precision, recall, and the confusion matrix.
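Here is a minimal sketch, again my own and not taken from the lecture, that ties several of these pieces together: logistic regression trained with SGD on the binary cross-entropy loss, followed by a confusion matrix with precision and recall. It assumes a synthetic 2-D dataset of two Gaussian blobs and uses an L2 penalty as the regularizer; the learning rate, epoch count, and function names are illustrative choices. A numerically stable `softmax` is included to show the multi-class generalization of the sigmoid.

```python
import math
import random

def sigmoid(z):
    """Logistic function, mapping a score to a probability in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # avoids overflow for large negative z
    return ez / (1.0 + ez)

def softmax(scores):
    """Multi-class probabilities; subtracting the max score keeps exp() stable."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train_logistic_sgd(data, lr=0.1, l2=1e-3, epochs=50, seed=0):
    """SGD on binary cross-entropy plus an L2 penalty (l2/2)*||w||^2.

    data: list of ((x1, x2), label) with label in {0, 1}.
    """
    rng = random.Random(seed)
    w = [0.0, 0.0]
    b = 0.0
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)  # visit examples in a new random order each epoch
        for (x1, x2), y in samples:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            err = p - y  # gradient of cross-entropy w.r.t. the score
            w[0] -= lr * (err * x1 + l2 * w[0])
            w[1] -= lr * (err * x2 + l2 * w[1])
            b -= lr * err
    return w, b

def confusion_matrix(y_true, y_pred):
    """Return (TP, FP, FN, TN) for binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn

# Synthetic toy data: class 1 centered at (1, 1), class 0 at (-1, -1)
rng = random.Random(1)
data = [((rng.gauss(1, 0.5), rng.gauss(1, 0.5)), 1) for _ in range(50)]
data += [((rng.gauss(-1, 0.5), rng.gauss(-1, 0.5)), 0) for _ in range(50)]

w, b = train_logistic_sgd(data)
y_true = [y for _, y in data]
y_pred = [int(sigmoid(w[0] * x1 + w[1] * x2 + b) >= 0.5) for (x1, x2), _ in data]
tp, fp, fn, tn = confusion_matrix(y_true, y_pred)
precision = tp / (tp + fp) if tp + fp else 0.0
recall = tp / (tp + fn) if tp + fn else 0.0
print(f"TP={tp} FP={fp} FN={fn} TN={tn} precision={precision:.2f} recall={recall:.2f}")
```

Precision answers "of the cases the model flagged as positive, how many really were?", while recall answers "of the truly positive cases, how many did the model find?". Both are read straight off the confusion matrix.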

Basics of Deep Learning - Part 3: Training and Optimization Techniques

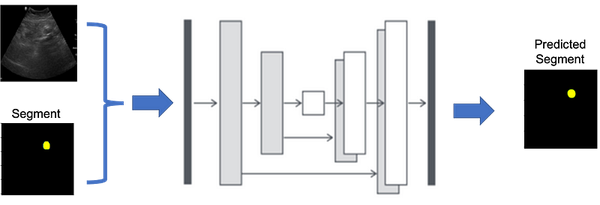

This video delves into training neural networks, covering the forward pass, backpropagation, and optimization methods such as gradient descent. It explores activation functions such as ReLU, Sigmoid, and Tanh, with a focus on their strengths and limitations. The lecture then explains convolutional layers, pooling, and feature maps, providing insights into how convolutional neural networks (CNNs) are used for image classification and segmentation tasks. Mini-batch processing is highlighted as key to efficient training. Lastly, several real-world applications of ConvNets, such as brain MRI segmentation and detection, are discussed.
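To illustrate the convolution, pooling, and activation ideas, here is a small plain-Python sketch (my own, not from the lecture). It applies a hand-picked 2×2 vertical-edge kernel to a toy 5×5 image, passes the result through ReLU to get a feature map, and downsamples it with 2×2 max pooling. The derivative helpers hint at the strengths and limitations mentioned above: the sigmoid's gradient is at most 0.25, so it can shrink when multiplied through many layers during backpropagation, while ReLU passes a gradient of 1 for positive inputs but 0 for negative ones. The kernel, image, and function names are assumptions for illustration; real CNNs learn their kernels and run on optimized libraries.

```python
import math

# Activation functions and the derivatives used during backpropagation.
def relu(z):
    return max(0.0, z)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # zero gradient for negative inputs

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # peaks at 0.25 when z = 0

def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2  # peaks at 1.0 when z = 0

def conv2d(image, kernel):
    """'Valid' 2-D convolution (strictly cross-correlation, as in most CNN code)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

# Toy 5x5 "image" with a vertical edge between columns 1 and 2
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hand-picked vertical-edge detector (a trained CNN would learn its kernels)
kernel = [[-1.0, 1.0], [-1.0, 1.0]]

feature_map = [[relu(v) for v in row] for row in conv2d(image, kernel)]
pooled = max_pool(feature_map)
print(feature_map)
print(pooled)
```

For this toy image every row of the 4×4 feature map is `[0.0, 2.0, 0.0, 0.0]`, because the kernel responds only where the intensity jumps from 0 to 1, and pooling reduces it to `[[2.0, 0.0], [2.0, 0.0]]` while keeping the edge response.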

I will upload the next part soon. See you then! 😃