Can you use Deep Learning for every imaging task regardless of dataset size?

Can you use Deep Learning for every imaging task regardless of dataset size ?

I have been asking myself this question for a long time, and my answer changes every few months. If you had asked me this question a few years back, my answer would have been, ‘It depends on how much data you have.’ Back then it was a general opinion: if you have a sufficiently large dataset (please note the definition of a ‘large dataset’ varies for different deep learning tasks, depending on the complexity of the task), then use deep learning; otherwise, machine learning techniques are better suited. Now, my answer is, ‘It depends on the task.’ I have worked on various medical imaging projects, and here is what I observed:

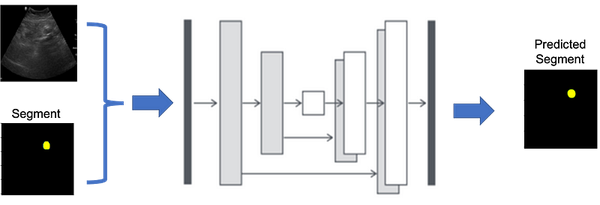

There are two main image tasks: Classification and Segmentation (pixel wise classification). In classification task, the deep learning model classifies the images into different desired groups (as shown in Figure 1). In segmentation task the model classifies the pixels of images into groups this process generally highlights the specific area of an image as shown in Figure 2 below.

Previously, tasks like the ones mentioned required large datasets. Even with transfer learning, it was common to need about 500–700 images and masks for medical imaging datasets in segmentation tasks. However, the landscape has significantly transformed.

The recent introduction of open-source models such as YOLOv8 (for segmentation and classification), SAM (Segment Anything Model for segmentation), and DINOV2 (Encoder for segmentation and classification), has made it possible to train a segmentation model with just 100 images and masks. To demonstrate this, I published a paper in this year’s ICCV workshop titled ‘Comprehensive Multimodal Segmentation in Medical Imaging: Combining YOLOv8 with SAM and HQ-SAM Models’. This paper experimentally showcases how combining YOLOv8 and SAM can produce high-quality masks. While I have limited experience with DINOV2, but based on my colleagues’ findings it may effectively generalize on small, unknown datasets.

In summary, it is now feasible to utilize deep learning models for imaging tasks, even with a limited dataset.

These are my opinions and experiences and I would love to know yours. Please let me know in comments.