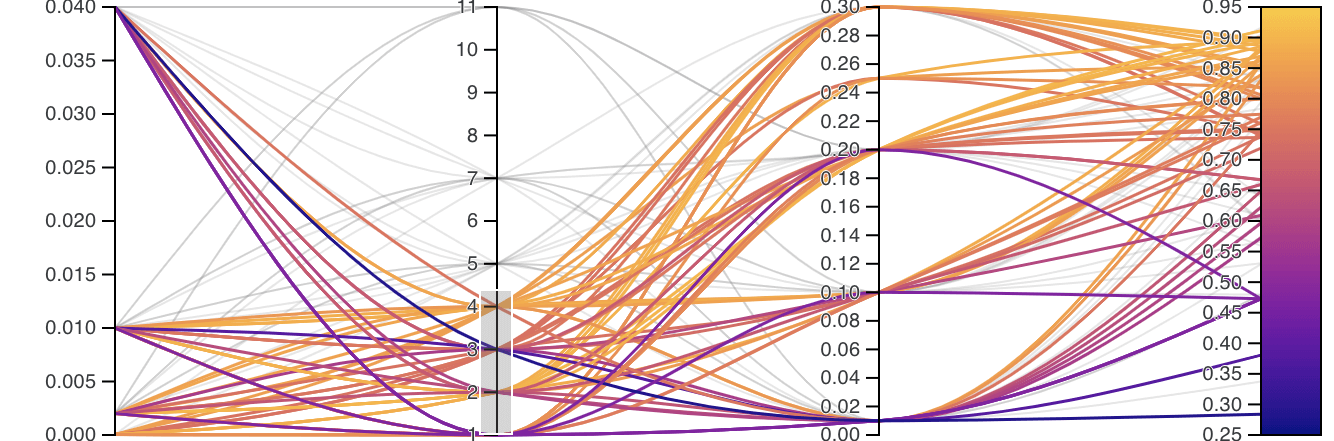

ML Model: Fine-Tuning Hyperparameters

Tuning hyperparameters with Grid Search and Cross-Validation can improve model performance

Hyperparameter tuning is a crucial step in improving the performance of a machine learning model. For Random Forest, the key hyperparameters to tune include:

- the number of trees (n_estimators)
- the maximum depth of each tree (max_depth)
- the minimum number of samples required to split an internal node (min_samples_split)
- the minimum number of samples required at a leaf node (min_samples_leaf)
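Each of these hyperparameters is a constructor argument of scikit-learn's RandomForestRegressor, and get_params() reports its current value. The following is a minimal sketch that assumes a recent scikit-learn (0.22 or later, where the default n_estimators is 100). It prints the defaults for the four hyperparameters tuned in this tutorial:

```python
from sklearn.ensemble import RandomForestRegressor

# Build a forest with default settings and read back the hyperparameters we plan to tune
rf = RandomForestRegressor(random_state=42)
params = rf.get_params()

tuned_keys = ["n_estimators", "max_depth", "min_samples_split", "min_samples_leaf"]
defaults = {key: params[key] for key in tuned_keys}
print(defaults)
# {'n_estimators': 100, 'max_depth': None, 'min_samples_split': 2, 'min_samples_leaf': 1}
```

Every one of these defaults appears in the parameter grid used in this tutorial. As a result, the untuned configuration is always one of the candidates the search evaluates.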

There are several methods to tune hyperparameters, including Grid Search, Random Search, and Bayesian Optimization. In this tutorial, we'll use Grid Search with Cross-Validation to find the optimal hyperparameters for our Random Forest model.
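Grid Search tries every combination in the grid. Random Search instead samples a fixed number of combinations from the same space. For comparison, here is a minimal sketch using scikit-learn's RandomizedSearchCV. It assumes synthetic data from make_regression in place of the tutorial's dataset, plus an arbitrary budget of n_iter=5 and cv=3:

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import RandomizedSearchCV

# Synthetic data stands in for the tutorial's X_train / y_train (assumption)
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

# Same value lists as the grid; with plain lists, combinations are sampled without replacement
param_distributions = {
    "n_estimators": [50, 100, 200],
    "max_depth": [None, 10, 20, 30],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
}

# Evaluate only n_iter sampled combinations instead of all of them
random_search = RandomizedSearchCV(
    estimator=RandomForestRegressor(random_state=42),
    param_distributions=param_distributions,
    n_iter=5,
    cv=3,
    scoring="neg_mean_absolute_error",
    random_state=42,
)
random_search.fit(X, y)

print(random_search.best_params_)
print(len(random_search.cv_results_["params"]))  # number of sampled candidates
```

The trade-off: Random Search bounds the cost by n_iter, but it can miss the single best grid point that an exhaustive Grid Search would find.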

Parameter Grid:

We define a dictionary (param_grid) with the hyperparameters we want to tune and the values to try for each.

```python
from sklearn.model_selection import GridSearchCV

# Define the parameter grid
param_grid = {
    'n_estimators': [50, 100, 200],
    'max_depth': [None, 10, 20, 30],
    'min_samples_split': [2, 5, 10],
    'min_samples_leaf': [1, 2, 4]
}
```

Initialize Grid Search:

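We create a GridSearchCV object with four settings:

- the Random Forest regressor
- the parameter grid
- the cross-validation setting (cv=5 for 5-fold cross-validation)
- the scoring metric

scikit-learn treats higher scores as better. For that reason, mean absolute error is requested as 'neg_mean_absolute_error', and the reported scores are negated MAE values, where closer to zero is better. Setting n_jobs=-1 runs the fits in parallel on all available CPU cores, and verbose=2 prints progress during the search.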
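To make cv=5 concrete: for a regressor, an integer cv tells scikit-learn to use KFold. KFold splits the data into 5 consecutive, unshuffled folds. The model is trained on four folds and scored on the fifth, and this repeats five times. The sketch below reproduces this by hand for a single candidate and checks the result against cross_val_score. It assumes synthetic data and n_estimators=50, chosen only to keep it fast:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import KFold, cross_val_score

X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
model = RandomForestRegressor(n_estimators=50, random_state=42)

# Manual 5-fold CV: fit on 4 folds, compute MAE on the held-out fold
manual_maes = []
for train_idx, val_idx in KFold(n_splits=5).split(X):
    model.fit(X[train_idx], y[train_idx])
    manual_maes.append(mean_absolute_error(y[val_idx], model.predict(X[val_idx])))

# Built-in equivalent; scores come back negated because higher must mean better
scores = cross_val_score(model, X, y, cv=5, scoring="neg_mean_absolute_error")

print(np.mean(manual_maes))
print(-scores.mean())  # matches the manual loop
```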

```python
from sklearn.ensemble import RandomForestRegressor

# Initialize the Random Forest regressor
rf = RandomForestRegressor(random_state=42)

# Initialize Grid Search with Cross-Validation
grid_search = GridSearchCV(
    estimator=rf,
    param_grid=param_grid,
    cv=5,
    n_jobs=-1,
    verbose=2,
    scoring='neg_mean_absolute_error'
)
```

Fit the Model:

The grid search iterates over all possible combinations of the hyperparameters, fits the model, and evaluates it using cross-validation.
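To estimate the cost of the search, count the combinations the grid defines. This pure-Python sketch enumerates them with itertools.product. The enumeration order may differ from scikit-learn's internal ParameterGrid, but the count is the same:

```python
from itertools import product

param_grid = {
    "n_estimators": [50, 100, 200],
    "max_depth": [None, 10, 20, 30],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
}

# Each combination of listed values is one candidate model
candidates = [dict(zip(param_grid, values)) for values in product(*param_grid.values())]
n_folds = 5

print(len(candidates))            # 108 candidates (3 * 4 * 3 * 3)
print(len(candidates) * n_folds)  # 540 cross-validation fits
print(candidates[0])              # {'n_estimators': 50, 'max_depth': None, 'min_samples_split': 2, 'min_samples_leaf': 1}
```

Adding a fourth value to any list multiplies the total work, so the size of the grid is the main cost lever in Grid Search.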

```python
# Fit the model
grid_search.fit(X_train, y_train)
```

Best Parameters and Score:

We retrieve the best hyperparameters and the corresponding score.
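Besides best_params_ and best_score_, the fitted search object exposes cv_results_, which holds the score of every candidate. The sketch below ranks all candidates from a deliberately small grid. It assumes synthetic data and uses a grid chosen only for speed, not the tutorial's grid:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV

X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

# Small illustrative grid (assumption): 4 candidates x 3 folds
small_grid = {"n_estimators": [50], "max_depth": [None, 5], "min_samples_leaf": [1, 4]}
search = GridSearchCV(RandomForestRegressor(random_state=42), small_grid, cv=3,
                      scoring="neg_mean_absolute_error")
search.fit(X, y)

results = search.cv_results_
# Rank 1 is the candidate with the highest mean score, i.e. the lowest mean MAE
for i in np.argsort(results["rank_test_score"]):
    print(results["rank_test_score"][i], -results["mean_test_score"][i], results["params"][i])

print(search.best_params_, -search.best_score_)
```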

```python
# Get the best parameters and best score
best_params = grid_search.best_params_
best_score = grid_search.best_score_  # negated MAE, because of the scoring choice

print(f'Best Parameters: {best_params}')
print(f'Best Cross-Validation MAE: {-best_score}')
```

Train with Best Parameters:

We train a new Random Forest model using the best hyperparameters and evaluate its performance on the test set.
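Retraining by hand is optional. With refit=True (the default), GridSearchCV already refits the best configuration on the full training set and stores it as best_estimator_. The sketch below checks that the two approaches produce the same predictions. It assumes synthetic data and a small illustrative grid:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

search = GridSearchCV(RandomForestRegressor(random_state=42),
                      {"n_estimators": [50], "max_depth": [None, 5]},
                      cv=3, scoring="neg_mean_absolute_error")
search.fit(X_train, y_train)

# Option 1: reuse the model that GridSearchCV refit on all of X_train
pred_refit = search.best_estimator_.predict(X_test)

# Option 2: retrain by hand with the same parameters and random_state
manual = RandomForestRegressor(**search.best_params_, random_state=42).fit(X_train, y_train)
pred_manual = manual.predict(X_test)

print(np.allclose(pred_refit, pred_manual))  # True
```

The predictions agree because both models are built from the same data, hyperparameters and random_state. Reusing best_estimator_ therefore saves one full training run.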

```python
from sklearn.metrics import mean_absolute_error, r2_score

# Train the model with the best parameters
best_rf = RandomForestRegressor(**best_params, random_state=42)
best_rf.fit(X_train, y_train)

# Make predictions on the test set
y_pred_best = best_rf.predict(X_test)

# Evaluate the model
mae_best = mean_absolute_error(y_test, y_pred_best)
r2_best = r2_score(y_test, y_pred_best)

print(f'Mean Absolute Error with Best Params: {mae_best}')
print(f'R-squared with Best Params: {r2_best}')
```

Hyperparameter tuning can improve your model's performance by finding the best-performing settings for the specific dataset and task. Grid Search can only choose among the values you list in the grid, so the result is only as good as the candidates you supply.
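Cross-validation picks the candidate with the best validation score. That choice is not guaranteed to beat the defaults on held-out data, so it is worth comparing the tuned model against an untuned baseline on the same test split. The following minimal sketch assumes synthetic data and a small illustrative grid. On real data, the tuned model may or may not come out ahead:

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Baseline: default hyperparameters
baseline = RandomForestRegressor(random_state=42).fit(X_train, y_train)

# Tuned: small illustrative grid (assumption) that still contains the defaults
search = GridSearchCV(RandomForestRegressor(random_state=42),
                      {"max_depth": [None, 5, 10], "min_samples_leaf": [1, 4]},
                      cv=5, scoring="neg_mean_absolute_error")
search.fit(X_train, y_train)

mae_baseline = mean_absolute_error(y_test, baseline.predict(X_test))
mae_tuned = mean_absolute_error(y_test, search.best_estimator_.predict(X_test))
print(f"Baseline MAE: {mae_baseline:.3f}")
print(f"Tuned MAE:    {mae_tuned:.3f}")
```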